The Power of Noticing: What the Best Leaders See

By Max H. Bazerman

Simon and Schuster (2014)

Summarized by Bryan Turner

What if you could make better decisions simply by making slight adjustments to how you analyze issues? That ability (noticing) exists, and The Power of Noticing: What the Best Leaders See shows you how to cultivate it.

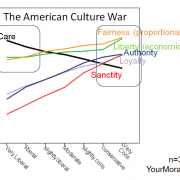

The main psychological cast of characters in this book is:

- Inattentional blindness

- Bounded awareness

- Bounded ethicality

- System 1 and System 2

- What you see is not all there is (WYSINATI)

- Motivated blindness

- The fallacy of auditor and creditor independence

- Change blindness

- Escalation of commitment

- Slippery-slope effect

- Omission bias

- Positive illusions

- Hyperbolic discounting

- Temporal myopia / present bias

- In-group favoritism

- Implicit discrimination

Annotated table of contents:

- Preface: Overview of the book

- Chapter 1: Going beyond our natural starting points to make better decisions

- Chapter 2: How motivated blindness affects all of us

- Chapter 3: How leaders can “overcome threats to noticing”

- Chapter 4: How entire industries can suffer from motivated blindness

- Chapter 5: How misdirection can cause failures of noticing

- Chapter 6: We’re more likely to miss unethical behavior when it occurs bit-by-bit

- Chapter 7: Learning from what isn’t said and what didn’t happen

- Chapter 8: If it’s too good to be true, there’s information you need to notice

- Chapter 9: Noticing by thinking ahead

- Chapter 10: Indirect harm gets less attention than direct harm unless…

- Chapter 11: Leadership to avoid predictable surprises

- Chapter 12: Developing the capacity to notice

PREFACE:

Overview of the chapter:

Our intuitive reactions to complex problems frequently range from suboptimal to downright wrong. Like corrective lenses that help you see better, adjusting your approach to decision making, based on insights from the behavioral sciences, can help you identify and act on the information you need to make well-thought-through decisions.

“Noticing,” as it’s used in this book, is about looking beyond the information and options that are immediately available and determining what’s actually necessary to make a good decision. This isn’t easy or innately intuitive, because our internal incentives naturally filter information in ways that make us miss options and make judgments based on incomplete data. Identifying when and where we’re susceptible to these predictable problems, and what to do about them, is the focus of the book.

Some good quotes from the chapter are:

“Are we capable of developing skills that can overcome the natural bounds of human awareness? The answer, which I explain in this book, is yes.”

“We are generally more affected by biases that restrict our awareness when we rely on System 1 thinking [automatic, intuitive thinking] than when we use System 2 thinking [controlled, rational thinking]. Noticing important information in contexts where many people do not is generally a System 2 process.”

“Here, then, is the purpose and promise of this book: your broadened perspective as a result of System 2 thinking will guide you toward more effective decisions and fewer disappointments.”

“The Power of Noticing explains many failures in contemporary society that cause us to wonder, How could that have happened? and Why didn’t I see that coming?”

“The concept of bounded rationality and the influential field of behavioral economics have largely defined problems according to how we misuse information that is right in front of us. By contrast, noticing concerns our bounded awareness, or the systematic and predictable ways we fail to see or seek out critical information readily available in our environment.”

CHAPTER 1: RACING AND FIXING CARS

Overview of the chapter:

“Inattentional blindness” and “bounded awareness” conspire to limit the extent to which we notice. The two main types of problems that arise from failing to notice are 1) only looking at options that are immediately apparent and 2) not getting the necessary data to make an informed decision.

To prevent poor decisions, Bazerman builds on Kahneman’s diagnosis of this bias, “what you see is all there is” (WYSIATI), and turns it into a corrective: “what you see is not all there is” (WYSINATI). The point is that even though we tend to consider only the information right in front of us, there are often other data points we need to be aware of to make smart decisions.

Some good quotes from the chapter are:

“Looking at all of the data shows a clear connection between temperature and O-ring failure and in fact predicts that the Challenger had a greater than 99 percent chance of failure. But, like so many of us, the engineers and managers limited themselves to the data in the room and never asked themselves what data would be needed to test the temperature hypothesis.”

“We often hear the phrase looking outside the box, but we rarely translate this into the message of asking whether the data before us are actually the right data to answer the question being asked.”

“Understanding what is at work when we fail to notice is crucial to understanding how we can learn to pay attention to what we’re missing.”

How to apply this to ethical systems design:

- To make better decisions, ask yourself, “What do I wish I knew?” and “What additional information would help inform my decision?” to see if you have the information necessary to make an informed decision.

- Ask yourself, “Is there another option I’m missing?” and “Could there be another option that we haven’t come up with yet?” to avoid being limited by your initial options.

- Slow down: noticing rarely happens in System 1, so for important decisions make sure you switch to System 2 and remember WYSINATI.

CHAPTER 2: MOTIVATED BLINDNESS

Overview of the chapter:

“The term motivated blindness describes the systematic failure to notice others’ unethical behavior when it is not in our best interest to do so. Simply put, if you have an incentive to view someone positively, it will be difficult for you to accurately assess the ethicality of that person’s behavior.”

It’s hard to believe that we are truly unable to see issues objectively. If you, like most people before they look at the data, think that being aware of your preferences and some of your biases is enough to disarm their persuasive power, you’re wrong. When we have incentives for a particular outcome, we disregard evidence to the contrary, no matter how hard we try to be objective.

This is hard to accept without some experience that actually demonstrates it, but the research is sound: if you’re motivated toward a particular outcome, your brain will distort facts, unconsciously dismiss conflicting evidence, and serve up biased evidence as necessary so that you comfortably reach your desired conclusion.

Convention still permits systems designed without regard for these inconvenient biases, but the message is clear: if you want genuine impartiality, you need accountability systems in which the people evaluating an issue have no vested interest in the outcome.

Some good quotes from the chapter are:

“Research in the field of behavioral ethics has found that when we have a vested self-interest in a situation, we have difficulty approaching that situation without bias, no matter how well-calibrated we believe our moral compass to be.”

“Motivated blindness affects most of us, including very successful and impressive people. Members of boards of directors, auditing firms, rating agencies, and others have had access to data that, from a moral standpoint, they should have noticed and acted on but did not.”

“Motivated blindness is not inevitable; in fact it is surmountable… Effective decision making, and consequently effective leadership, can hinge on overcoming motivational blindness. But how can we do so? First, we can learn to more fully notice the facts around us. Second, we can make decisions to notice and act when it is appropriate to do so. Third, we can create clear consequences for leaders when they fail to act on facts that indicate unethical behavior. Fourth, leaders can provide decision makers throughout their organization with incentives to speak up.”

How to apply this to ethical systems design:

- Don’t place people in situations with conflicts of interest. No matter how ethical you think they are, neutrality is just not possible.

- Remember that people will do the easy thing. Placing people in situations with conflicts of interest asks them to go against their invisible cognitive biases and makes unethical behavior the easy choice; it’s only a matter of time before their good intentions give way, no matter how morally motivated they are.

- If you want genuine impartiality, you need accountability systems in which the people evaluating an issue don’t have a vested interest in the outcome.

- To fight motivated blindness, 1) make it easy for people to speak up, 2) have them attain emotional distance, and 3) have them consider the opposite.

CHAPTER 3: WHEN OUR LEADERS DON’T NOTICE

Overview of the chapter:

What you see is not all there is (WYSINATI), but it can certainly feel that way unless you develop a process for seeing beyond the information that’s immediately available. When delinquent behavior occurs, we tend to focus on the most visible culprit (often the most downstream product of perverse relationships and incentives) rather than on the leaders who created the systems that predictably incentivized the behavior in the first place. Leaders have a unique opportunity to step back and identify what could be changed to prevent problems in the future.

Some good quotes from the chapter are:

“Too often boards institutionalize patterns of behavior that create blinders, and these blinders lead to their failing to notice critical information.”

“The press frequently reports on massive cheating scandals in colleges and universities, including my own. This reporting focuses on the end episode, the actions of the students. These actions are truly unfortunate, but the press underreports on the leaders – teachers and administrators – who have overlooked the conditions, norms, and incentives that create the environment for the cheating to take place.”

“Leaders often fail to notice when they are obsessed by other issues, when they are motivated to not notice, and when there are other people in their environment working hard to keep them from noticing. This chapter is about how leaders can overcome these threats to noticing.”

“If one of the four major audit firms overlooked such extreme fraud for nine years – fraud that Merrill Lynch detected in less than ten days of due diligence – it seems reasonable to question the veracity of the auditing industry.”

How to apply this to ethical systems design:

- If you’re in a leadership position, ask yourself if you have the necessary information to feel confident about a decision and what information you would need to feel more confident about a decision.

- If you identify deviant behavior that you want to see changed, don’t just focus on the most visible manifestation of the problem; ask yourself what conditions, norms, relationships, incentives, and biases allowed the problem to take place, and then come up with a plan for changing them.

- Think through people’s incentives, proactively identify conflicts of interest and places where people must go against their naturally incentivized patterns of behavior, and design strategies to make ethical behavior in these situations easier. It’s not realistic to expect everyone to go above and beyond when their peers can do something easier and benefit from it (think steroids in baseball, cheating in school, and questionable data manipulation in research).

- Identify places where ethical behavior could put people at a disadvantage and figure out how to eliminate the opportunity to benefit from unethical behavior to start with.

- “Regulators and policymakers need to consider how actors in the market can be expected to play their roles, given their self-interest – that is, how their actions can distort markets or otherwise take advantage of those who do not have a voice.”

CHAPTER 4: INDUSTRYWIDE BLINDNESS

Overview of the chapter:

One of the problems in dealing with conflicts of interest is that people think they involve a deliberate choice between right and wrong. They can, but far more deleterious are situations where the conflict of interest powerfully and predictably biases how people interpret information without their noticing. These biased interpretations inevitably lead to biased outcomes, no matter how hard people try to avoid them and however inconvenient that is to believe.

In other words, if you have an incentive to view something in a particular way, it is demonstrably impossible for you to assess the related information accurately and objectively. Your brain works its subconscious magic without your knowledge, and because you can’t see your own biases and haven’t engaged in obvious wrongdoing, you mistakenly conclude that conflicts of interest don’t affect you. That is, until you actually look at the studies and the data…

Telling people they should change the way they behave because of tricks of the brain that they can’t see is always hard. Here it is further complicated because 1) you can’t see how your biases influence the way you process information (what Epley calls the lens problem), 2) the pernicious problem of conflicts of interest is less about making or not making an ethical decision and more about being in situations that make neutrality impossible, and 3) whether by commission or omission, institutional convention is to ignore all but the most egregious conflicts.

Some good quotes from the chapter are:

“If rating agencies have an incentive to please the companies they assess, unbiased assessments are not possible.”

“Society is currently paying enormous costs without getting the very service that the industry claims to provide: independent audits.”

“So, what’s the problem, exactly? It is that we have institutionalized a set of relationships in which auditors have a motivation to please their clients, a state of affairs that effectively eliminates auditor independence.”

“This view of conflict of interest as exclusively intentional leads to the view that moral suasion or sanctions can prevent the destructive effects of conflict of interest. However, extensive research demonstrates that our desires influence the way we interpret information, even when we are trying to be objective and impartial… We discount facts that contradict the conclusion we want to reach, and we uncritically accept evidence that supports our positions. Unaware of our skewed information processing, we erroneously conclude that our judgments are free of bias.”

“Auditors for the sellers reached estimates of the company’s value that, on average, were 30 percent higher than the estimates of auditors who served buyers. These data strongly suggest that the participants assimilated information about the target company in a biased way: being in the role of the auditor biased their estimates and limited their ability to notice the bias in their clients’ behavior… Furthermore we replicated this study with actual auditors from one of the Final 4 large auditing firms. Undoubtedly a long-standing relationship involving millions of dollars in ongoing revenues would have an even stronger effect.”

How to apply this to ethical systems design:

- Remember that the problem with conflicts of interest is less about making a clearly ethical or unethical choice and more about the impossibility of neutrality when someone has incentives for a particular outcome.

- If you really want an independent assessment of something, don’t ask people who have incentives to reach particular outcomes.

CHAPTER 5: WHAT DO MAGICIANS, THIEVES, ADVERTISERS, POLITICIANS, AND NEGOTIATORS HAVE IN COMMON?

Overview of the chapter:

This chapter is about how various forms of misdirection can make you miss important information that would have been helpful to notice. Our attention is limited, so if we’re focusing on one thing, we’re necessarily not focusing on another. This means that if our attention is misdirected to something unimportant, we’re likely to miss the information necessary for an effective decision.

Like many powerful ideas, some of this sounds obvious, but when you’re presented with a neatly categorized comparison, how often do you stop to ask yourself, “Are these the data I need to make a wise decision?” This chapter can help you avoid failures to notice that stem from misdirection.

Some good quotes from the chapter are:

“In this chapter we look at other groups of people who try to keep you from noticing what is in your best interest to notice. Successful marketers and politicians, for two examples, are often skilled at misdirection. Their jobs can depend on it. An array of common business tasks, including negotiation and working as a team, also incorporate elements of misdirection. It is safe to say that, until a majority of us can spot the misdirection of others, people in these fields will continue to have incentives to misdirect.”

“The solution is clear; in negotiation, when you think you have a deal, confirm the details rather than making inferences about ambiguously stated terms. Sometimes the ambiguity is intended to deceive.”

“Whenever we are interacting with people who we believe do not have our best interests in mind, we need to look beyond the information they put in front of us and think about what they are trying to get us to do and how we can get the information we actually need. When our goal becomes simply to notice, we can avoid the misdirection of magicians and others who borrow from their craft.”

How to apply this to ethical systems design:

- Before a presentation or decision-making exercise, ask yourself in advance what data are necessary to make a wise decision. This makes it much more likely that you’ll notice if the data presented aren’t what you need.

- “When the other side in a negotiation makes a demand that doesn’t make sense to you, don’t assume they are acting irrationally. Instead stop and ask yourself what you might not know that could explain their actions – and whether they might be trying to misdirect you.”

- “The best advice to avoid misdirection in negotiations is to put yourself in the other person’s shoes. This practice is rarely done, and it’s all the more important when we are interacting with people who may not have our best interests in mind.”

- “Critically, issue-by-issue agendas can create misdirection for both negotiators by distracting them from a discussion format that would lead to the discovery of such trades.”

- When making group decisions, remember that groups tend to focus on shared information rather than information held by only a few members, so ask people to speak up about any relevant information that hasn’t been raised yet.

CHAPTER 6: MISSING THE OBVIOUS ON A SLIPPERY SLOPE

Overview of the chapter:

If you were talking with a stranger and a few people passed between you, interrupting your conversation, you’d notice if a different stranger were now standing in the original one’s place, wouldn’t you? Actually, in one study about half of the participants didn’t. If half of people don’t notice something as obvious as the person they’re talking with being replaced, what important information might you be missing when making decisions?

Humans are good at detecting abrupt changes in stimuli but not as good at noticing slow trends that evolve over time. This holds true in a lot of areas, including unethical behavior. In many instances people responsible for large crimes, who in retrospect blatantly disregarded laws, started off with a minor transgression – that others failed to notice – which then snowballed over time. Mix in a few cognitive biases (overconfidence and escalation of commitment) and before you know it you have a recipe for massive criminality.

Some good quotes from the chapter are:

“The slippery-slope effect, we believe, helps to explain why watchdogs, boards of directors, auditors, and other relevant third parties fail to notice the unethical actions of those they are assigned to monitor.”

“And in most cases there was evidence that the executives involved planned to correct the manipulations in the following period or so… The firms followed a slippery slope of fraudulent reporting, with earnings management becoming more aggressive, eventually providing the evidence needed for SEC sanctions. This pattern often occurred without the firm’s auditors or board of directors even noticing it.”

“Because overconfidence can prevent us from noticing the downside to our actions, it can trigger wars that never should have been started, labor strikes that create no net benefit to either side, baseless lawsuits, failed companies that should not have been launched and the failure of government representatives to reach economically efficient deals.”

“Psychological research on the phenomenon of escalating commitment shows that decision makers who commit themselves to a particular course of action have a tendency to make subsequent decisions that continue that course of action.”

How to apply this to ethical systems design:

- Don’t wait for big transgressions to act. If you nip unethical behavior in the bud, you’ll be preventing the slippery-slope effect. Remember, very few people start out with the intention of committing massive fraud; it’s more frequently the result of a slippery slope.

- If something seems too good to be true, it probably is. That should be a signal to notice what’s not right and look for abnormalities.

- Beware the escalation of commitment: if something is going poorly, understand you have a strong internal incentive to sink more resources into it in the hopes that it goes better, even when it might be a terrible decision.

- Beware the overconfidence bias: seek out disconfirming evidence (it can be easier to find than you think) especially when making large decisions.

CHAPTER 7: THE DOG THAT DIDN’T BARK

Overview of the chapter:

Sometimes, the most useful information can come from what isn’t said. Cultivating the ability to infer lessons from information that is not presented or immediately visible can help you notice what’s needed to make better decisions. This, however, requires practice and System 2 thinking.

Some good quotes from the chapter are:

“Here again, noticing what did not happen is key to making the right decision. The lesson: whenever something seems too good to be true, it is often useful to consider what events did not happen. We need to notice the dogs that don’t bark in addition to those that do.”

“Analyzing what didn’t happen in a given situation is a very difficult cognitive task; it is not intuitive for us to think this way. However, this kind of thinking is particularly valuable in strategic contexts, where it is critical to think about the decisions of others.”

“How do you notice missing information? The answer is to learn Holmes’s trick of hearing the dog that didn’t bark. This is a System 2 exercise. It requires that we learn to think about what normally will occur and notice when that does not take place.”

How to apply this to ethical systems design:

- Slow down, use your System 2, and ask yourself “what didn’t happen?” or “what did they not do?” to see what additional information you can turn up.

- When doing strategic thinking, ask your team how the omission bias could make you focus too much on some outcomes, not enough on others and what to do about it.

CHAPTER 8: THERE’S SOMETHING WRONG WITH THIS PICTURE: OR, IF IT’S TOO GOOD TO BE TRUE…

Overview of the chapter:

“Too many of us leap at the prospect of 20 percent returns year in and year out, or a $40 MacPro laptop, rather than ponder how such improbable promises are being delivered.”

We’ve all heard the admonition “if it’s too good to be true, then it probably is,” but how often do we heed it? This chapter explains why we’re persuaded by overly optimistic thinking and makes a compelling case that when something seems too good to be true, we need to do a better job of noticing.

Some good quotes from the chapter are:

“Successful decision makers take note of environments where inaction is the right course.”

“Bidders exhibit three common mistakes. First, they fail to follow the common advice to put yourself in the other guy’s shoes. In the $100 bill auction – in fact in any auction – it is essential to think about the other bidders’ motivations… Second, people who plan on making only a limited number of bids in an auction often bid past their self-imposed limit… Third, like Swoopo bidders, once in an auction, most of us feel an irrational desire to win.”

“Why didn’t they notice? Many didn’t want to notice; they suffered from positive illusions, or the tendency to see the world the way we would like it to be.”

How to apply this to ethical systems design:

- “Anytime you hear about something that seems too good to be true, you should be skeptical.”

- When making a deal, consider what information the other side has and what you could be missing.

- Beware of positive illusions. We want to be right and we want things to work out in our favor; that’s natural. However, to keep these illusions from distorting your decisions, take the outside view (as Kahneman puts it) or use reference class forecasting to check whether you’re being overly optimistic.

CHAPTER 9: NOTICING BY THINKING AHEAD

Overview of the chapter:

We give too much weight to the present, we fail to consider how others will perceive our choices and we generally fail to consider the impact of our decisions within the larger chain of events of which they are a part. All of these can lead to suboptimal decisions. Thinking one step ahead can prevent poor decisions, identify information asymmetries that we need to be mindful of and help us understand whether to be cynical or optimistic about our counterparts in negotiations. Like many tools of noticing, this is a skill that can be developed.

Some good quotes from the chapter are:

“They systematically exclude readily available information – that the seller is privileged to information that they are not – from their decision processes. Like people who buy expensive jewelry without an independent appraisal, or out-of-town home buyers who trust their bank to appraise the value of the home, or acquirers in the merger market, a majority of bidders fail to account for the asymmetric relationships that could negatively affect them. They fail to realize that their outcome is conditional on acceptance by the other party and that acceptance is most likely to occur when it is least desirable to the negotiator making the offer.”

“The ‘Acquiring a Company’ problem pits intuition against more systematic analysis. And once again we see important limitations of intuitive, System 1 thinking. We automatically rely on simplifying tools, but we have the potential to use rational System 2 processes to more fully anticipate the decisions of others and identify the best response.”

“In some situations it is fairly costless to collect additional information to test our intuition, but we often fail to do so. Your goal should be to understand the strategic behavior of others without destroying opportunities for trust building.”
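The logic in the first quote above (acceptance is most likely exactly when the deal is worst for the bidder) can be made concrete with a small calculation. The sketch below assumes the classic textbook version of the “Acquiring a Company” problem, whose numbers this summary does not give: the target is worth between $0 and $100 per share to its current owner, every value in that range equally likely; it would be worth 50 percent more under the bidder’s management; and the owner, who knows the true value, accepts any offer at or above it. The function names are hypothetical and for illustration only.

```python
# A minimal sketch of the "Acquiring a Company" logic under ASSUMED parameters
# (value uniform on [0, 100], 50% more valuable to the bidder, informed seller).
# Function names are hypothetical, chosen for illustration.


def naive_profit(offer: float, max_value: float = 100.0, synergy: float = 1.5) -> float:
    """Intuitive (System 1) estimate: uses the unconditional average value
    and ignores that the seller's acceptance depends on the true value."""
    return synergy * max_value / 2 - offer


def expected_profit(offer: float, max_value: float = 100.0, synergy: float = 1.5) -> float:
    """Expected profit per share, averaged over all seller values.

    Assumptions: the seller's value v is uniform on [0, max_value], the company
    is worth synergy * v to the bidder, and the seller accepts only if offer >= v.
    """
    if offer <= 0:
        return 0.0  # no offer is ever accepted, so nothing is gained or lost
    accepted_up_to = min(offer, max_value)
    p_accept = accepted_up_to / max_value
    # Given acceptance, v is uniform on [0, accepted_up_to], so its mean is accepted_up_to / 2.
    value_if_accepted = synergy * accepted_up_to / 2
    return p_accept * (value_if_accepted - offer)


if __name__ == "__main__":
    for offer in (0, 20, 40, 60, 80, 100):
        print(f"offer ${offer:>3}: naive {naive_profit(offer):+6.1f}, "
              f"conditional {expected_profit(offer):+6.1f}")
```

Under these assumptions, the naive view (an average value of $50, worth $75 to the bidder) makes a $60 offer look like a $15 gain. Once you notice that the seller accepts only when the company is worth $60 or less, the expected result of that offer becomes a loss, and the same holds for every positive offer.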

How to apply this to ethical systems design:

- Ask yourself how the information you’re presenting is likely to be received.

- Ask yourself whether you’re engaging in hyperbolic discounting, sacrificing the future too heavily for the sake of the present.

- Ask if this is the right decision for now and the future.

CHAPTER 10: FAILING TO NOTICE INDIRECT ACTIONS

Overview of the chapter:

“Reflection clarifies the obvious role of indirect actors in influencing the direct actors toward ethical misconduct.”

It’s always hard to determine where responsibility lies in complex situations. Unsurprisingly, people attribute more blame to direct actions than to indirect ones, even though either can make people equally culpable. Examining the role of the direct actor alongside that of the indirect actor, however, can expose indirect unethical acts for what they really are.

Some good quotes from the chapter are:

“But as we will see throughout this chapter, people fail to hold organizations accountable when they are the indirect cause of harm. By definition, indirect harm often goes unnoticed and is particularly hard for people – manufacturers, retailers, and consumers – to see.”

“The logic behind adding this third condition is that our past research has found that people act more rationally and reflectively when they compare two or more options at the same time. We found that when participants were able to compare the two scenarios, they reversed the preferences of those who looked at just one option and judged Action B to be more unethical than Action A. In short, when the indirect action was brought to their attention – when they were made to notice – they saw the reasonable causal link and assigned blame accordingly.”

“For decades, goal setting has been promoted as an effective means of managing and directing employees. Goals work well in many contexts, but it is critical to think about – and to notice – their broader impact. In particular, organization leaders have a responsibility to think through the indirect effects of goals.”

“The harms created by indirect effects are a leadership challenge. Leaders need to think beyond the moment to anticipate the problems that their organization’s procedures could create.”

How to apply this to ethical systems design:

- To unearth the real ethicality of a decision, assess the role of the direct and indirect actors simultaneously. This will avoid the commonplace reaction of blaming the direct actor while absolving the indirect actor (who frequently creates the situation to begin with) of responsibility.

- Focusing on one thing necessarily lessens focus on another (think of the Walmart, Staples and Sears examples). Ensure that you’re not indirectly promoting unethical behavior by focusing disproportionately on one aspect of a decision to the detriment of another important one.

CHAPTER 11: LEADERSHIP TO AVOID PREDICTABLE SURPRISES

Overview of the chapter:

“Predictable surprises are a unique and significant consequence of the failure to notice important information and the failure to lead based on what you notice.”

Many of the large setbacks our organizations experience can be anticipated. Creating the structures, and cultivating the ability, to identify the information you need to notice impending disasters, and to know when to act on it, is a significant leadership challenge. However, learning to navigate these challenges successfully will pay substantial dividends for your organization.

Some good quotes from the chapter are:

“A predictable surprise occurs when many key individuals are aware of a looming disaster and understand that the risk is getting worse over time and that conditions are likely to eventually explode into a crisis, yet they fail to act in time to prevent the foreseeable damage.”

“Why do leaders typically fail to anticipate predictable surprises? The answer to this question is multifaceted: predictable surprises have cognitive, organizational, and political causes.”

“A number of cognitive biases cause us and our leaders to downplay the importance of coping with a predictable surprise. First we view the world in a more positive light than is warranted… Second, we tend to overly discount the future… Third, people, organizations, and nations tend to follow the rule of thumb ‘Do no harm.’”

“Many predictable surprises can also be attributed in part to a small number of individuals and organizations corrupting the system for their own benefit. Consider the failure of the United States to enact meaningful campaign finance reform, which has created an election environment that much of the world would consider to be legalized corruption.”

How to apply this to ethical systems design:

- Check to see if positive illusions, hyperbolic discounting and indirect versus direct action could be negatively influencing your decision making.

- Establish accountability for externalities; without clear accountability people are more likely to follow the path of least resistance and “just do their job.”

- To break out of silos, create systems that allow information to flow across channels so that you can identify emerging threats. Conduct cost-benefit analyses to determine whether you should act on them (though beware of ethical fading), and then act as appropriate.

- “Anticipating and avoiding predictable surprises requires leaders to take three critical steps: recognize the threat, prioritize the threat, and mobilize the resources required to prevent the predictable surprise.”

CHAPTER 12: DEVELOPING THE CAPACITY TO NOTICE

Overview of the chapter:

“This closing chapter offers some final advice on how you can improve your ability to notice and help others notice important information.” This chapter synthesizes the preceding chapters with a bent towards implementation in practice.

Becoming a better noticer can seem hard in the real world because talking about known unknowns, unknown unknowns and what to do about them can be a little abstract. But if you can summon the grit necessary to overcome the initial cognitive resistance to implement the lessons from this book, you’ll be a much better decision maker for it. Remember, noticing is a skill that requires practice.

Some good quotes from the chapter are:

“A company, sometimes an entire industry, can be full of smart executives who have accepted the constraints about how things are done. But outsiders are more likely to notice when a dysfunctional constraint has been placed on the system.”

“The insider/outsider distinction suggests an additional strategy for noticing more: invite an outsider to share his or her insights.”

“In the areas of financial reporting, for example, external auditing systems have been designed in a way that demotivates auditors from doing the one thing that they are in business to do: notice when their clients’ books are off. Instead auditors’ primary incentives are to keep their customers happy, get rehired, and be hired to provide nonaudit services.”

“Organizations institute systems, including organizational structures, rewards systems, and information systems that affect what their employees will pay attention to – and what they will overlook.”

How to apply this to ethical systems design:

- “First-class noticers move beyond faulty intuition and examine closely the relevant data. So, to become the Billy Beane of your industry, the question you should be asking yourself is this: What conventional wisdom prevails in my industry that deserves to be questioned?”

- Take the outside view. Do this yourself by asking how things have gone for others in similar situations, and ask someone outside your industry (who doesn’t share your theoretical starting point) what they think, to get a wider perspective.

- “Leaders should audit their organizations for features that get in the way of noticing.”